In this article:

- The Basics of A/B Testing for Design

- The Benefits of A/B Testing in Graphic Design

- Setting Clear Goals Before Testing

- How to Create and Implement an A/B Test for Design

- Interpreting the Results

- What Are Some Examples of A/B Testing in Design?

- Best Practices for Effective A/B Testing

- Common Mistakes to Avoid

- Designing More Confidently With A/B Testing

Designing has always been about finding that one choice that drastically changes how someone experiences your work. Sometimes, a slight change is all it takes to open up an entirely different reaction. You might change a typeface, and suddenly, the tone feels warmer. Swap a color, and a button that once blended in now begs to be clicked.

It is these moments — when tiny, thoughtful decisions flip the way people respond — that remind you how much power lives in the details. The only way to truly see those transformations is to put them out into the real world and watch what happens next.

The Basics of A/B Testing for Design

A/B testing, also called split testing, is a research method for comparing two versions of a design element to see which one performs better. In graphic design, it helps you learn which design choice is driving the reaction you are aiming for.

Split testing is also common in fields like website development and marketing copy, but the focus is different in design. For instance, UX research measures how layout changes affect navigation or task completion. Graphic design split testing, by contrast, focuses on the visual elements that influence perception, emotion and engagement. These are things that often feel subjective until you see how they perform side by side.

Common design elements you may A/B test include:

- Logos: Shape, proportion or color variations

- Typography: Font styles, weights or hierarchy

- Color palettes: Primary colors, accents or background shades

- Imagery: Style, subject matter or cropping of visuals

- Call-to-action (CTA) buttons: Shape, size or color emphasis

For example, I once designed a homepage hero banner for a client’s product launch. In Version A, I had a bold headline over a high-contrast background. Version B had the same headline, but I switched to a softer, image-based background. I tracked which version encouraged more visitors to click through, which helped me pinpoint whether the background style played a role in driving engagement.

This type of testing gave me a way to validate my creative choices. With real-world feedback, I could learn whether my work was as effective as it was appealing.

The Benefits of A/B Testing in Graphic Design

A/B testing helps you make more strategic decisions, which leads to better results over time. Here is how it can sharpen your skills and strengthen your design thinking and impact.

Data-Driven Decision-Making

Relying on gut instinct alone can be risky, especially when multiple stakeholders are involved. Split testing gives you objective evidence to support your creative choices, making it easier to justify your decisions and keep projects going.

Improving Inclusivity

Good design serves everyone, and A/B testing can help ensure your work is accessible and appealing to a wide audience. With close to 70 million disabled people living in the U.S., testing variations for readability, contrast and navigation can make a huge difference in how a significant number of users experience your creations. Beyond helping with compliance, these efforts welcome as many people as possible, which helps you earn their trust and loyalty.

Reduced Risk

Launching an untested design can feel like a leap into the unknown. By testing before a full rollout, you reduce the chance of poor performance, brand misalignment and audience confusion. This makes split testing a useful safeguard, especially in campaigns with a lot at stake.

Audience Insights

How people respond to your designs reveals a lot about their preferences and behaviors. This matters because most people will not tell you when something is not working: only one in 26 consumers will report a problem. A/B testing helps uncover what is behind these drop-offs without relying on direct user feedback, so you can make adjustments before losing their attention.

Setting Clear Goals Before Testing

A/B testing works best when you know what you want to learn from it. Without a clear goal, it is easy to run tests for the sake of it, which can lead to vague results that do not tell you much.

That is why it is worth spending time upfront defining the specific outcome you want to reach. This could mean aiming for more clicks on a CTA button, higher engagement on a visual or stronger readability for a block of text.

Either way, you must decide on one element to focus on at a time and identify how you will measure success. If you test too many variables at once, it becomes nearly impossible to know which change made the difference. Keeping it focused also ensures the data you collect is relevant and actionable.

Your goals should be measurable and tied to a meaningful result. For example, “I want the design to feel more dynamic” is too vague to test. A clearer goal would be “increase interaction with the homepage banner by 15%.”

This specificity sets up the test, making it easier to read the results and decide your next step. When you approach A/B testing with a defined question, you set yourself up to get answers that lead to intentional, more impactful decisions.
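One way to keep a goal this specific is to write it down as data before the test starts. The sketch below is only an illustration: the `DesignTestGoal` class, its field names and the 4% baseline rate are hypothetical, and the 15% target borrows the example figure above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DesignTestGoal:
    """A single, measurable goal for one A/B test (hypothetical structure)."""

    element: str                 # the one design element being changed
    metric: str                  # how success is measured
    baseline_rate: float         # current rate for the metric, e.g. 0.04 = 4%
    target_relative_lift: float  # desired improvement, e.g. 0.15 = 15%

    def target_rate(self) -> float:
        """Rate the variation must reach for the goal to count as met."""
        return self.baseline_rate * (1 + self.target_relative_lift)

    def is_met(self, observed_rate: float) -> bool:
        """True when the observed rate reaches or exceeds the target."""
        return observed_rate >= self.target_rate()


# Assumed numbers for illustration only.
goal = DesignTestGoal(
    element="homepage banner",
    metric="interaction rate",
    baseline_rate=0.04,
    target_relative_lift=0.15,
)
```

Writing the goal down this way makes it harder to quietly change the success criterion once results start coming in.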

How to Create and Implement an A/B Test for Design

Once you have a clear goal, your next step should be to turn that objective into a structured test. Here is how to set it up to give you trustworthy results:

- Choose the design element to test: Start with a single, specific change you want to evaluate. This could be as simple as altering a button color or as involved as trying a new layout. Limiting the scope keeps the results easy to understand.

- Develop Version A and Version B: Version A is your current or “control” design, while Version B is your variation. Ensure each is complete enough to stand independently, as unfinished designs can skew the results.

- Select your audience sample: Decide who will see each version. The goal is to test on groups as similar as possible so the results reflect the design change, not differences in audience behavior.

- Run the test under controlled conditions: Keep variables like timing, platform and messaging consistent between the two versions. This ensures that any difference in performance is due to the design itself rather than outside factors.

- Measure performance with the right tools: Use analytics or testing software to track your chosen metrics. Make sure your data collection method matches the goal you set at the start.

- Analyze and decide on the next step: Look at the results with your original goal in mind. Did the change achieve what you wanted? If so, you can move forward with that version. If not, you have still learned something valuable for your next iteration.
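If your testing tool does not handle the split for you, the audience and measurement steps above could be sketched roughly as follows. This is a minimal illustration, not a specific product's method: the function names, the `hero-banner-test` experiment label and the idea of hashing a visitor ID are all assumptions.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "hero-banner-test") -> str:
    """Deterministically place a visitor in Version A or B (roughly 50/50)."""
    # Hashing the experiment name together with the visitor ID means the same
    # visitor always sees the same version for the life of the test.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


def click_through_rate(clicks: int, visitors: int) -> float:
    """Share of visitors who clicked; 0.0 when nobody has seen the version yet."""
    return clicks / visitors if visitors else 0.0
```

Assigning by hash rather than by time of day or traffic source helps keep the two groups comparable, which is the point of the audience step.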

Interpreting the Results

When the numbers start rolling in, it is tempting to focus only on which version “won.” However, results are most valuable when you dig deeper to understand the context and whether the differences are meaningful. This means checking whether the outcome truly stems from your design change or simply happened by chance.

One of the biggest challenges in A/B testing is ensuring your results are statistically significant. Without significance, you cannot be sure the variation’s performance would hold up if you ran the test again.

Only 20% of tests reach 95% significance, meaning few produce results you can count on being repeatable. This is why it is worth running your test long enough and using a large enough sample size while making sure your audience groups are as consistent as possible.
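To make "95% significance" concrete, here is a minimal sketch of one common check, a two-proportion z-test, using only Python's standard library. The function name and the visitor counts in the example are made up for illustration, and most testing tools run a check like this (or a different statistical method) for you.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 0.0, 1.0  # no variation at all, so no evidence of a difference
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 200 of 5,000 visitors clicked on A, 260 of 5,000 on B.
z, p_value = two_proportion_z_test(200, 5000, 260, 5000)
significant_at_95 = p_value < 0.05
```

A p-value below 0.05 corresponds to the 95% threshold mentioned above. It does not say how large or how valuable the difference is, only that it is unlikely to be pure chance.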

Finally, remember that a “losing” variation can be the feedback to build on. Even if the results are not in your favor, they tell you something about what your audience responds to and what it ignores. Over time, small, informed changes can reveal insights that shape stronger, more intentional designs.

What Are Some Examples of A/B Testing in Design?

A/B testing can feel abstract until you see it in action. Here are a few examples showing how different organizations apply these principles in real projects.

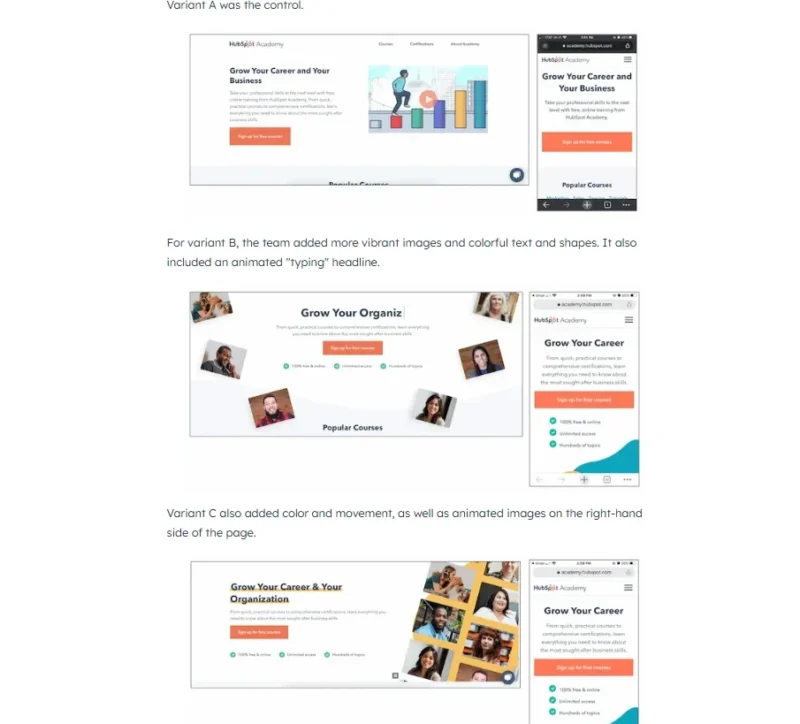

HubSpot Academy’s Measurable Impact on Its Hero Image

Source: https://blog.hubspot.com/marketing/a-b-testing-experiments-examples

HubSpot ran an experiment on its homepage hero image. Despite more than 55,000 page views, only 0.9% of users watched the video featured in the hero section, though nearly half of those who did watched it all the way through. To improve on that, the team set up a test with three versions:

- Version A remained the control.

- Version B added brighter visuals and bold, animated headlines.

- Version C layered in animated text and imagery.

Of the three versions, HubSpot found that Version B outperformed the control by 6%. Rolling out Version B, a relatively minor set of tweaks, could also lead to 375 more sign-ups each month.

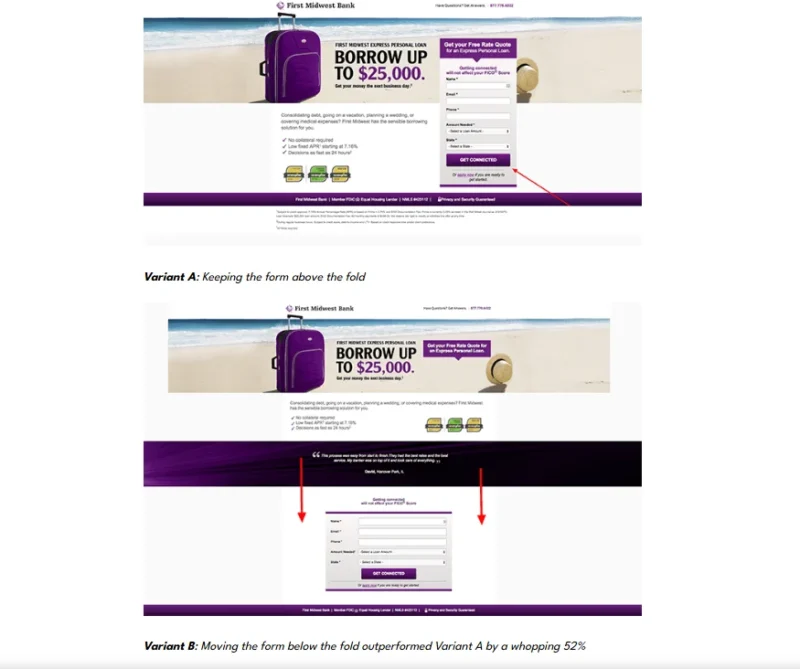

First Midwest Bank Testing Different Landing Page Approaches

Source: https://unbounce.com/a-b-testing/examples

Another case comes from First Midwest Bank, which used Unbounce to run surprisingly creative experiments in a conventional industry. The team first tested the impact of using photographs of people by showing a smiling face on one landing page. It saw a boost in conversions, but only in certain states. Using a landing page builder can make these kinds of experiments easier to implement and optimize.

For example, a photo of a smiling man increased conversions by 47% in Illinois, yet decreased them by 42% in Indiana. Taking this insight further, the bank created 26 state-specific landing pages with tailored imagery.

It also challenged the conventional wisdom that forms need to sit above the fold and saw a 52% increase in conversions. It is a reminder that even widely accepted best practices may not always hold, and that nuance matters in how audiences engage with visual designs.

Best Practices for Effective A/B Testing

After seeing how A/B testing works in real scenarios, it is clear that success often comes down to the details of how you run your tests. By following a few guidelines, you can gather insights that yield conclusive results.

Best practices to keep in mind include:

- Test one change at a time: Focusing on one variable ensures you know what caused a performance difference.

- Use a large enough sample size: Small audiences can produce misleading trends that do not hold for your wider audience.

- Keep your audience groups consistent: Similar demographics, device types and traffic sources help isolate the impact of the design change.

- Avoid making changes mid-test: Introducing new variables while running a test can invalidate the results.

- Document your process and results: Keeping a record of what you tested, why and what you learned builds a valuable reference for future projects.

- Test continuously: Insights compound over time, and ongoing testing helps you adapt to changes in audience behavior and trends.
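How large is "large enough"? A rough answer comes from the standard sample-size formula for comparing two proportions, sketched below. The function name, the 4% baseline rate and the defaults (95% significance, 80% power) are assumptions for illustration, and dedicated calculators may use slightly different formulas.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(
    baseline_rate: float,
    relative_lift: float,
    alpha: float = 0.05,
    power: float = 0.80,
) -> int:
    """Approximate visitors needed per version to detect the given lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    # Critical values for a two-sided test and the desired power.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical scenario: 4% baseline click rate, hoping to detect a 15% lift.
needed = sample_size_per_variant(baseline_rate=0.04, relative_lift=0.15)
```

The smaller the lift you want to detect, the more visitors each version needs, which is one reason subtle visual tweaks can take a long time to test properly.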

Common Mistakes to Avoid

Even a well-planned split test can fall short if a few common missteps creep in. Avoiding these pitfalls will help you get accurate and actionable results.

Ending a Test Too Early

One frequent mistake is cutting a test short before it has gathered enough data. A/B tests last about 35 days on average, and some stretch to six weeks or more, although one to two weeks can be sufficient in many cases to account for fluctuations in user behavior. As a general rule, let a test run for two to six weeks: long enough to capture sufficient data, but not so long that shifts in user behavior muddy the results.
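A quick back-of-the-envelope estimate can tell you whether your traffic supports a test within that window. The sketch below is an assumption-laden illustration: the function name and the traffic figures are hypothetical, and the two-week floor simply mirrors the rule of thumb above.

```python
import math


def estimated_test_days(
    visitors_per_variant: int,
    daily_visitors: int,
    min_days: int = 14,
) -> int:
    """Estimate how many days a two-version test needs to run."""
    total_needed = 2 * visitors_per_variant  # Version A plus Version B
    raw_days = math.ceil(total_needed / daily_visitors)
    # Round up to whole weeks so every day of the week is represented equally.
    whole_weeks = math.ceil(max(raw_days, min_days) / 7)
    return whole_weeks * 7


# Hypothetical traffic: 18,000 visitors needed per version, 1,500 visitors a day.
days = estimated_test_days(visitors_per_variant=18000, daily_visitors=1500)
```

If the estimate lands well beyond six weeks, it may be more practical to test a bolder change or a higher-traffic placement than to let the test drag on.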

Testing Changes Without a Clear Hypothesis

Running a test to “see what happens” can produce results that are hard to interpret. Without a focused question, knowing what the data tells you and whether the change is worth implementing can be difficult.

Ignoring the Broader Context

A design element may perform well in isolation but falter when placed within the full customer journey. Looking only at a single metric without considering its downstream impact can lead to changes that help in one area but hurt another.

Designing More Confidently With A/B Testing

When you think about it, A/B testing comes down to achieving clarity in how your design performs and how each choice contributes to the bigger picture. It turns guesswork into insight, helping you make decisions backed by more than instinct. You can confidently design knowing your end product looks good and works effectively.